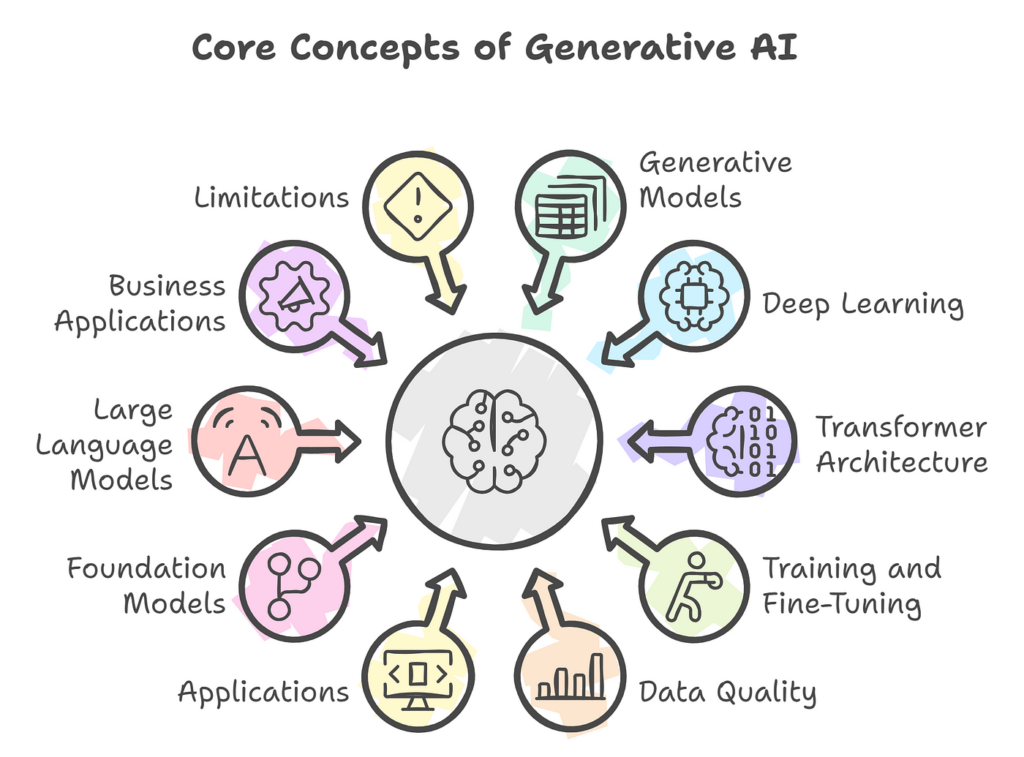

How Generative AI Works: Understanding the Technology

Generative AI uses machine learning models trained on existing data to produce new content such as text, images, audio, and code. Here's an overview of the foundational technologies behind it:

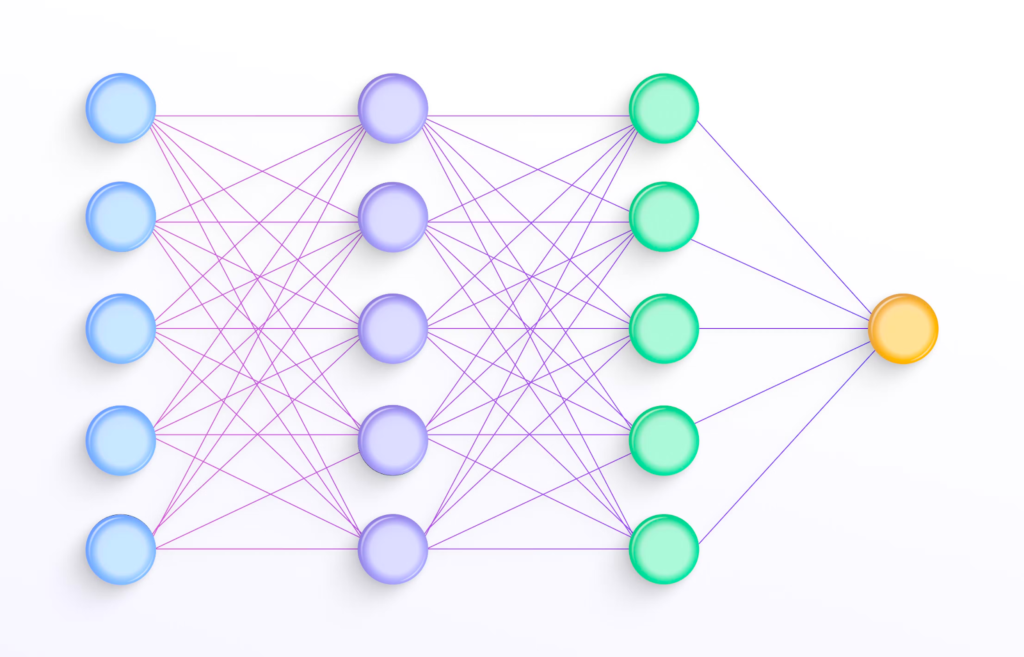

1. Neural Networks: The Backbone of Generative AI

Neural networks are computational models inspired by the human brain. They consist of interconnected layers of nodes (neurons) designed to process data and learn patterns.

- How They Work: Neural networks analyze vast datasets, identifying complex patterns through multiple layers of processing. Each layer extracts specific features, such as edges in an image or patterns in text, to create meaningful outputs.

- Role in Generative AI: Neural networks enable generative AI to learn from existing data and create new, original outputs like text, images, or audio.
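
To make the idea of layers concrete, here is a minimal sketch of a forward pass through a tiny two-layer network, written in plain Python. The layer sizes, the random weights, and the helper names (`dense`, `forward`, `init_params`) are illustrative assumptions, not part of any particular library; a real network would also learn its weights from data rather than keep random ones.

```python
import math
import random

def dense(inputs, weights, biases, activation):
    """One fully connected layer: activation(W @ x + b)."""
    return [
        activation(sum(w * x for w, x in zip(row, inputs)) + b)
        for row, b in zip(weights, biases)
    ]

def relu(z):
    return max(0.0, z)

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    """Pass the input through a hidden ReLU layer, then a sigmoid output layer."""
    hidden = dense(x, params["w1"], params["b1"], relu)
    return dense(hidden, params["w2"], params["b2"], sigmoid)

def init_params(n_in, n_hidden, n_out, seed=0):
    """Random starting weights; training (not shown) would adjust these."""
    rng = random.Random(seed)

    def matrix(rows, cols):
        return [[rng.uniform(-1, 1) for _ in range(cols)] for _ in range(rows)]

    return {
        "w1": matrix(n_hidden, n_in), "b1": [0.0] * n_hidden,
        "w2": matrix(n_out, n_hidden), "b2": [0.0] * n_out,
    }

params = init_params(n_in=3, n_hidden=4, n_out=2)
output = forward([0.5, -1.2, 3.0], params)
print(output)  # two values, each strictly between 0 and 1
```

Each call to `dense` is one layer: it combines every input with a weight, adds a bias, and applies a nonlinearity. Stacking such layers is what lets a network build up from simple features to more complex ones.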

2. Generative Adversarial Networks (GANs)

GANs are a specific type of neural network architecture designed for generative tasks. They consist of two components that are trained against each other:

- The Generator: Creates synthetic data (e.g., images, videos, or text) from random noise, learning to imitate the training data without seeing it directly.

- The Discriminator: Tries to tell generated samples apart from real ones. Its judgments are the training signal that improves the generator's performance.

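Ideally, this adversarial process continues until the discriminator can no longer reliably tell generated outputs from real data. In practice, GAN training can be unstable and may stop short of that point.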

- Applications: GANs are widely used for creating realistic images, deepfake videos, and even generating synthetic datasets.
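
The tug-of-war between generator and discriminator can be expressed as two loss functions. The sketch below assumes the discriminator outputs a probability that a sample is real and uses the common "non-saturating" generator loss. The function names and the example probabilities are made up for illustration, and no actual networks are trained here.

```python
import math

def bce(prediction, target, eps=1e-12):
    """Binary cross-entropy for one probability and a 0/1 target."""
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1 - target) * math.log(1.0 - p))

def discriminator_loss(d_real, d_fake):
    """The discriminator wants real samples scored 1 and generated samples scored 0."""
    real_term = sum(bce(p, 1) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator wants its samples scored as real (non-saturating form)."""
    return sum(bce(p, 1) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores at two points in training.
real_scores = [0.90, 0.95]
early_fake_scores = [0.05, 0.10]   # fakes are easy to spot
late_fake_scores = [0.45, 0.55]    # fakes are nearly indistinguishable

print(generator_loss(early_fake_scores), generator_loss(late_fake_scores))
print(discriminator_loss(real_scores, early_fake_scores),
      discriminator_loss(real_scores, late_fake_scores))
```

As the generator improves, its loss falls while the discriminator's loss rises, which is the adversarial dynamic described above.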

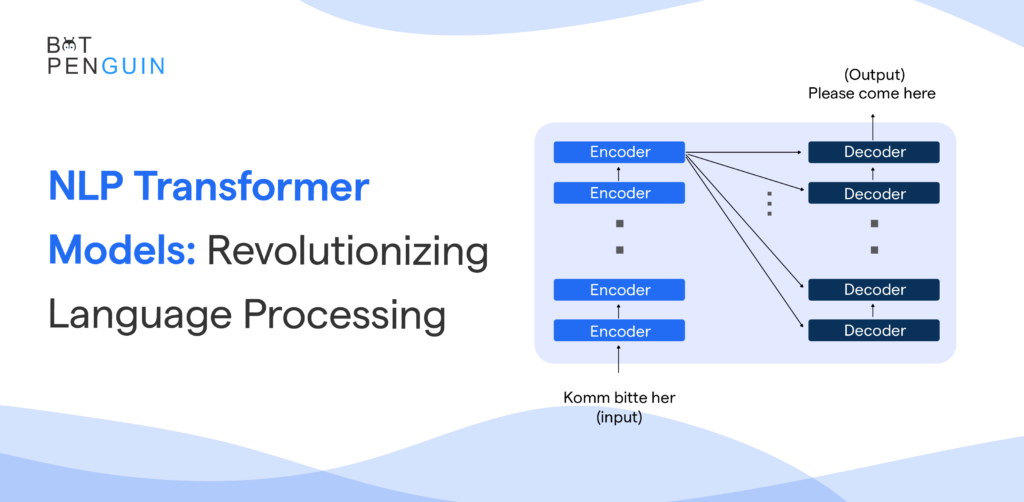

3. Transformer Models: Revolutionizing Text and Language Generation

Transformer-based models such as GPT (Generative Pre-trained Transformer), which generates text, and BERT, which is designed mainly for language understanding, have redefined how AI handles language-related tasks.

- How They Work: These models use self-attention to process all tokens of the input in parallel rather than one at a time. Each token is weighed against every other token, which lets the model capture context and relationships between words or data points.

- Applications: Transformers power text generation, translation, summarization, and even coding assistance.
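
The attention step at the heart of a transformer can be sketched in a few lines. This is a simplified, single-head version of scaled dot-product attention in plain Python. The toy token vectors are invented for illustration, and the learned projection matrices that a real transformer applies before this step are left out.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V."""
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token should attend to every token in the input.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted mix of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights

# Three toy token vectors; in self-attention the same vectors act as Q, K, and V.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` shows how much one token draws on every other token. Because no row depends on another, all rows can be computed at the same time.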

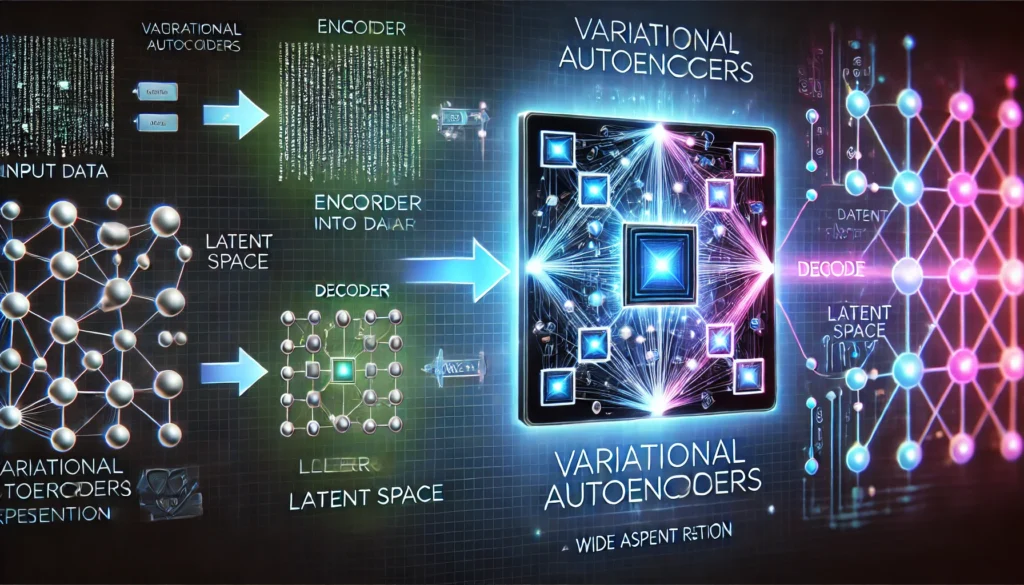

4. Variational Autoencoders (VAEs)

VAEs are another type of neural network used in generative tasks.

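- How They Work: VAEs encode input data into a compressed latent representation, described as a probability distribution rather than a single point. Sampling from that distribution and decoding the sample produces a similar but potentially modified version of the input.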

- Role in Generative AI: VAEs are well suited to generating variations of data, such as altering images or synthesizing new ones.
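
Two pieces make the "compressed format" of a VAE generative: sampling from the latent distribution and a penalty that keeps that distribution close to a standard normal. The sketch below shows both in plain Python. The encoder and decoder networks are omitted, and the `mu` and `log_var` values are hypothetical encoder outputs chosen for illustration.

```python
import math
import random

def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), one value per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return 0.5 * sum(m * m + math.exp(lv) - lv - 1.0
                     for m, lv in zip(mu, log_var))

rng = random.Random(0)
mu, log_var = [0.5, -1.0], [-2.0, -2.0]   # hypothetical encoder output for one input

# Each sample is a slightly different latent code; decoding each one
# would yield a slightly different variation of the input.
samples = [reparameterize(mu, log_var, rng) for _ in range(3)]
penalty = kl_to_standard_normal(mu, log_var)
print(samples)
print(penalty)
```

During training, this KL penalty is typically added to a reconstruction loss, so the model balances faithful reconstruction against a smooth latent space that can be sampled from.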

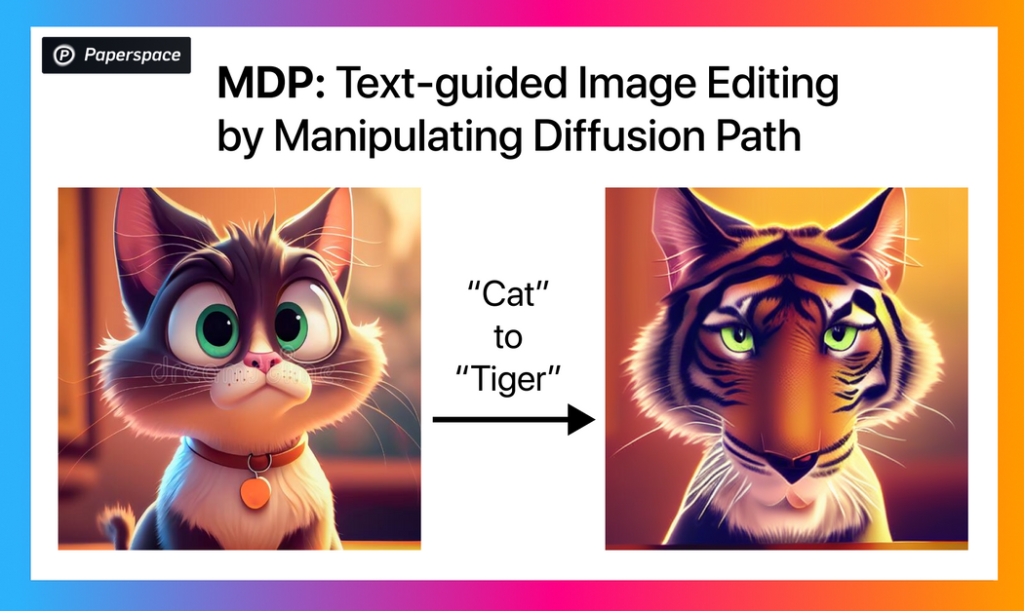

5. Diffusion Models: A Rising Star in Generative AI

Diffusion models are a newer technology. During training, noise is gradually added to data and the model learns to reverse that process step by step. To generate new content, the model starts from pure noise and denoises it repeatedly. These models have recently gained prominence for generating highly realistic images.

Applications of Diffusion Models:

- High-quality image generation (e.g., DALL-E 2, Stable Diffusion).

- Video and animation creation.
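
The noise-adding half of a diffusion model is simple enough to sketch directly. The example below follows the widely used formulation in which a noisy sample at step t is a blend of the original data and Gaussian noise. The linear schedule values, the 1000-step count, and the function names are assumptions chosen for illustration, and the denoising network that learns the reverse process is not shown.

```python
import math
import random

def linear_betas(steps, start=1e-4, end=0.02):
    """Noise variance added at each step, rising linearly."""
    return [start + (end - start) * t / (steps - 1) for t in range(steps)]

def cumulative_alphas(betas):
    """alpha_bar_t = product of (1 - beta_s) for s <= t: the fraction of signal left."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * eps."""
    signal = math.sqrt(alpha_bars[t])
    noise = math.sqrt(1.0 - alpha_bars[t])
    eps = [rng.gauss(0.0, 1.0) for _ in x0]
    return [signal * x + noise * e for x, e in zip(x0, eps)], eps

alpha_bars = cumulative_alphas(linear_betas(1000))
rng = random.Random(0)
x0 = [1.0, -1.0, 0.5]                      # stand-in for pixel values

slightly_noisy, _ = add_noise(x0, 10, alpha_bars, rng)
mostly_noise, target_eps = add_noise(x0, 999, alpha_bars, rng)
print(alpha_bars[10], alpha_bars[999])     # signal fraction early vs. late
```

In training, a network would be shown a noisy sample and its step index and asked to predict the added noise (`target_eps` here). Generation then runs the learned denoising from pure noise back toward data.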

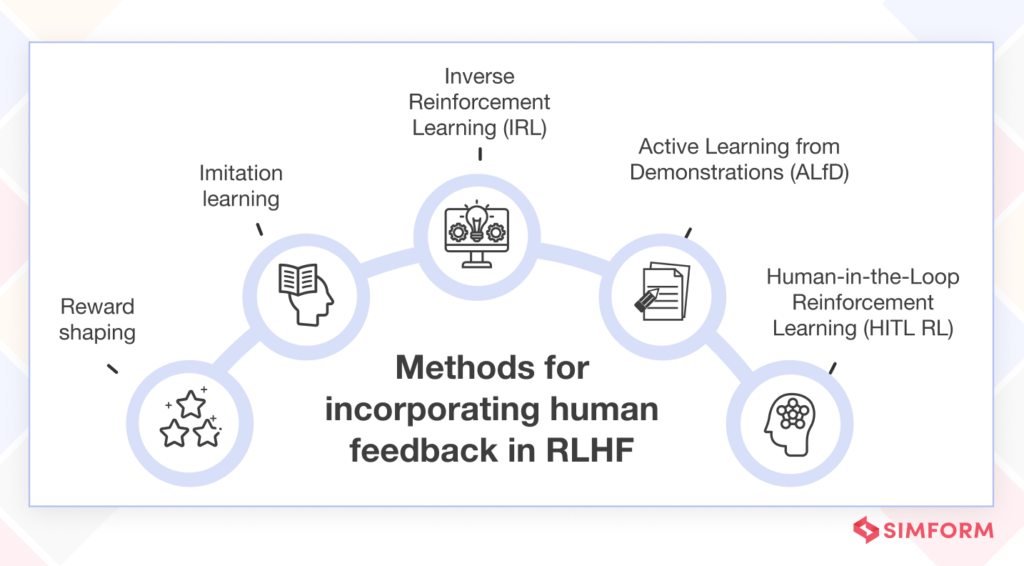

6. Reinforcement Learning from Human Feedback (RLHF)

Reinforcement learning allows AI to improve its generative capabilities by learning from feedback. With RLHF, human reviewers compare or rate model outputs, those judgments are used to train a reward model, and the generative model is then fine-tuned to produce outputs that score well. This helps align outputs with user preferences and ethical standards.

Applications of RLHF:

- ChatGPT-style conversational agents.

- Decision-making systems for gaming or simulations.
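
One common way to turn human feedback into a training signal is to fit a reward model on pairs of responses where a person preferred one over the other. The sketch below trains a tiny linear reward model with a pairwise preference loss. The two features (labelled "helpfulness" and "rudeness"), the example pairs, and the function names are all hypothetical, and the later reinforcement learning step that uses the reward model is not shown.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """-log sigmoid(r_chosen - r_rejected): small when the preferred answer scores higher."""
    margin = reward_chosen - reward_rejected
    return math.log(1.0 + math.exp(-margin))

def reward(w, features):
    """Linear reward model: r(x) = w . x."""
    return sum(wi * xi for wi, xi in zip(w, features))

def train_reward_model(pairs, n_features, lr=0.1, epochs=200):
    """Fit w so that human-preferred responses outscore rejected ones."""
    w = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in pairs:
            margin = reward(w, chosen) - reward(w, rejected)
            # Gradient of the loss w.r.t. w is -(1 - sigmoid(margin)) * (chosen - rejected).
            scale = 1.0 - 1.0 / (1.0 + math.exp(-margin))
            w = [wi + lr * scale * (c - r) for wi, c, r in zip(w, chosen, rejected)]
    return w

# Each pair is (features of preferred response, features of rejected response),
# with hypothetical features [helpfulness, rudeness].
pairs = [([0.9, 0.1], [0.4, 0.8]), ([0.7, 0.0], [0.6, 0.9])]
w = train_reward_model(pairs, n_features=2)
print(w)
print(preference_loss(reward(w, pairs[0][0]), reward(w, pairs[0][1])))
```

After training, the learned weights reward the first feature and penalize the second, so the model's scores agree with the human choices it was shown.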

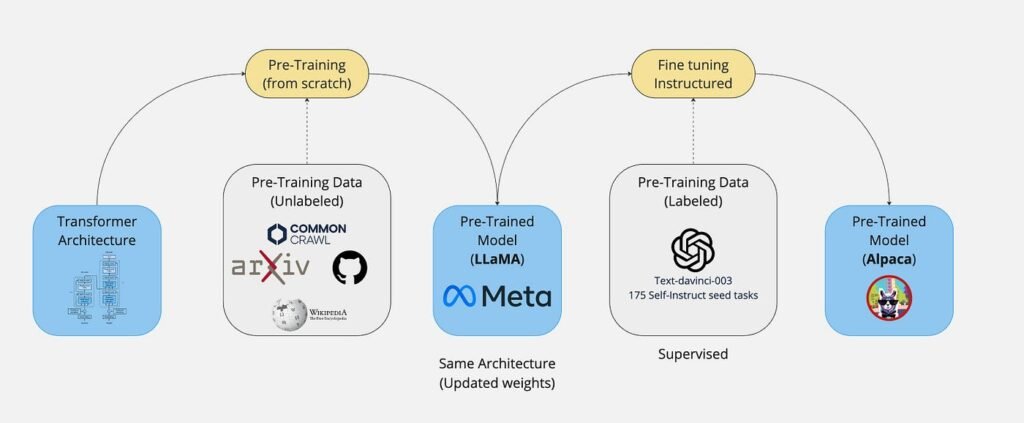

7. Pre-training and Fine-tuning

Generative AI models undergo two major phases of learning:

- Pre-training: The model learns from large datasets to understand patterns, structures, and context.

- Fine-tuning: The model is further refined on specific datasets to tailor its outputs for targeted tasks.
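
The two phases can be illustrated with a deliberately tiny model: a single weight fitted by gradient descent. This is only an analogy under simplifying assumptions (a one-parameter model, synthetic noise-free data, invented dataset sizes and learning rate), but it shows why starting from a pre-trained weight helps when task data is scarce.

```python
import random

def sgd_fit(w, data, lr, epochs):
    """Fit y = w * x by stochastic gradient descent on squared error, starting from w."""
    for _ in range(epochs):
        for x, y in data:
            w -= lr * 2.0 * (w * x - y) * x
    return w

rng = random.Random(0)
xs = [rng.uniform(-1.0, 1.0) for _ in range(500)]
general_data = [(x, 2.0 * x) for x in xs]            # large, broad dataset (slope 2.0)
task_data = [(0.5, 1.5), (-0.8, -2.4), (1.0, 3.0)]   # small task dataset (slope 3.0)

# Phase 1: pre-train on the large dataset, starting from scratch.
pretrained_w = sgd_fit(0.0, general_data, lr=0.05, epochs=5)

# Phase 2: fine-tune on the small dataset, starting from the pre-trained weight.
finetuned_w = sgd_fit(pretrained_w, task_data, lr=0.05, epochs=20)

# Baseline: the same small training budget without pre-training.
scratch_w = sgd_fit(0.0, task_data, lr=0.05, epochs=20)

print(pretrained_w, finetuned_w, scratch_w)
```

With the same small budget on the task data, the fine-tuned weight ends up closer to the task's target than the one trained from scratch, because pre-training already moved it most of the way there.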

8. Training Data: The Foundation of Generative AI

The quality and diversity of training data play a critical role in the performance of generative AI models. Models are trained on vast datasets, ranging from text and images to audio and videos, to learn patterns and generate realistic outputs.

Challenges and Limitations

While generative AI is powerful, it faces challenges like:

- Bias in Training Data: Can lead to biased outputs.

- High Computational Costs: Requires significant resources for training.

- Ethical Concerns: Deepfakes and misinformation are potential risks.

Generative AI’s advanced technologies—neural networks, GANs, transformers, and more—are unlocking new frontiers in creativity, automation, and problem-solving. As the field evolves, its applications and possibilities will continue to grow, shaping the future of innovation.
