How Generative AI Works: A Comprehensive Beginner’s Guide to Retrieval Augmented Generation (RAG)


Artificial Intelligence (AI) has grown by leaps and bounds over the past few years, and one of its most exciting advancements is Generative AI. This technology is no longer confined to research labs or tech giants—individuals and companies of all sizes are using it to create realistic text, stunning images, engaging music, and even computer code. If you’ve ever used ChatGPT or seen AI-generated artwork, you’ve already experienced Generative AI in action.

However, there’s another term you might have come across: Retrieval Augmented Generation (RAG). RAG supercharges Generative AI by letting it fetch the latest, most relevant information in real time. Imagine you’re writing a research paper on last week’s scientific discoveries—you want an AI assistant that can generate well-structured summaries and reference newly published data. That’s where RAG comes in.

By the time you finish reading, you will not only understand how Generative AI works and what RAG is, but also how the two together can create some truly impressive applications. Whether you’re a complete beginner or a tech-savvy enthusiast, this post will guide you step by step.

What Is Generative AI?

Before we dive into RAG, let’s unpack the basics of Generative AI itself. The word “generative” hints at its core function: to create new and original content. Traditional AI systems often follow strict rules or make predictions based on previously learned data. Generative AI, on the other hand, goes a step further. It identifies patterns in large datasets and uses those patterns to produce fresh output—whether that be text, images, or other forms of media.

1.1 The Shift from Traditional AI to Generative AI

• Traditional AI (Discriminative or Predictive Models): These models are typically designed to classify or label data. For example, a predictive model might decide whether an email is spam or not. The output is usually a category or numerical value.

• Generative AI: Rather than focusing on labels, Generative AI focuses on creativity. Once it learns from large datasets (e.g., millions of documents, images, or even audio samples), it can synthesize entirely new material. This makes it ideal for tasks like writing essays, composing music, or creating art.

1.2 Real-World Examples of Generative AI

• ChatGPT or GPT-based Models: These can generate coherent text responses to prompts, acting like a virtual writing assistant or brainstorming partner.

• Midjourney and DALL·E: These AI tools create images from text prompts, often producing art that ranges from realistic to abstract.

• GitHub Copilot: Assists developers by generating code snippets on the fly, expediting the software development process.

1.3 Why Is Generative AI Important?

1. Boosts Productivity: Automates repetitive tasks, such as drafting emails or coding boilerplate functions, freeing humans to focus on bigger-picture thinking.

2. Expands Creativity: Suggests ideas you might not have thought of, whether for content creation, design concepts, or storytelling.

3. Personalization: Generates tailored experiences in real time—like chatbots that adapt their tone or product recommendations in e-commerce.

How Generative AI Works: A Step-by-Step Breakdown

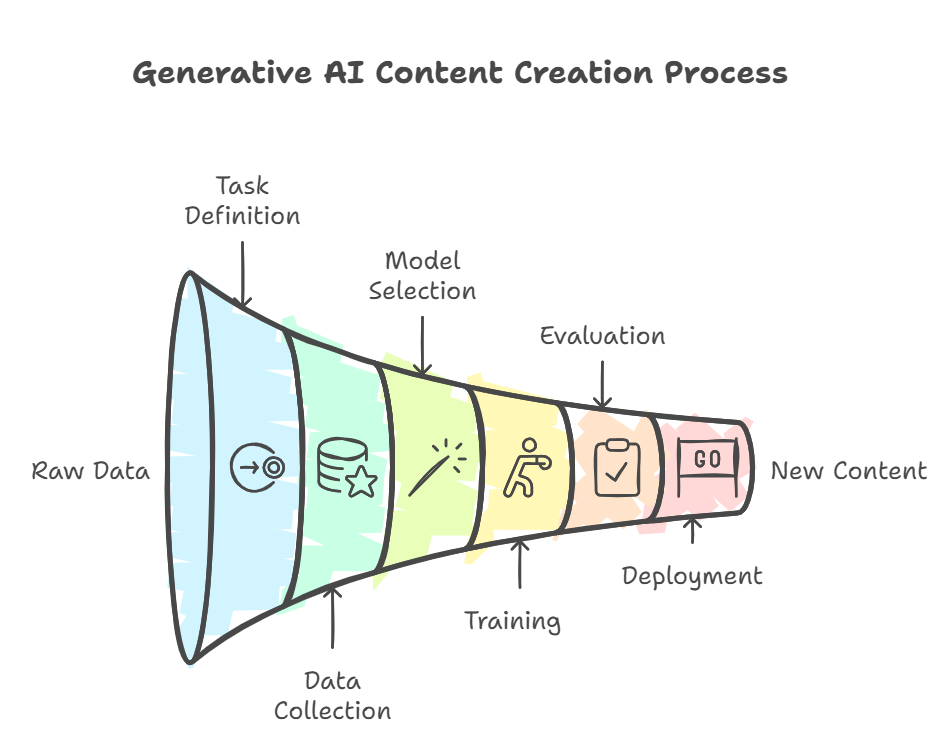

You might be wondering: How exactly does Generative AI go from raw data to brand-new content? While it can get pretty technical under the hood, the process can be broken down into more manageable steps.

1. Task Definition

Before any AI does its magic, you have to decide what you want it to do. Are you trying to generate articles, create images, or produce snippets of code? Clarity on your end-goal will help you select the right data and the right model.

2. Data Collection and Preparation

- Data is King in AI. If you’re training a model to generate marketing copy, you need a broad dataset of well-written marketing materials. If you’re focusing on code generation, you’ll gather and clean code repositories.

- Data Cleaning is a crucial step: removing duplicates, correcting errors, and ensuring consistency. Garbage in, garbage out holds true—if your dataset is poor quality, your model’s output will suffer.
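To make the cleaning step concrete, here is a minimal sketch using only Python's standard library. The function name `clean_corpus` and the sample strings are made up for illustration; a real project would add domain-specific checks on top of this.

```python
import re

def clean_corpus(texts):
    """Normalize whitespace and drop empty or duplicate entries, keeping first-seen order."""
    seen = set()
    cleaned = []
    for text in texts:
        # Collapse runs of spaces, tabs, and newlines into single spaces.
        normalized = re.sub(r"\s+", " ", text).strip()
        key = normalized.lower()  # assumption: case-only variants count as duplicates
        if normalized and key not in seen:
            seen.add(key)
            cleaned.append(normalized)
    return cleaned

raw = ["Buy  now!\n", "buy now!", "", "Limited offer."]
print(clean_corpus(raw))
```

Treating case-only variants as duplicates is a design choice, not a rule. For code datasets, for example, you would probably want an exact comparison instead.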

3. Model Selection

Several types of AI models can be used for generation, such as GANs and diffusion models for images. Nowadays, Transformer-based architectures (like GPT) are common for text because they excel at understanding context.

4. Training

- Once you’ve chosen a model architecture, you feed it your dataset so that it can learn patterns, vocabulary, style, or any other relevant features.

- Text-based models learn word associations, grammar structures, and writing styles. Image models learn shapes, colors, and artistic patterns.

5. Evaluation and Fine-Tuning

- You test your model on a separate set of data not used in training (called a validation set). If the outputs are not good enough, adjustments are made. This can include tweaking learning rates, changing the model’s architecture, or gathering more data.
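Holding out a validation set can be sketched in a few lines. This is an illustrative helper (the name `train_validation_split` and the 20% default are assumptions, not a standard), written with the standard library only.

```python
import random

def train_validation_split(examples, validation_fraction=0.2, seed=0):
    """Shuffle the examples and hold out a fraction of them for validation."""
    items = list(examples)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_validation = max(1, int(len(items) * validation_fraction))
    # The first n_validation shuffled items are held out; the rest are for training.
    return items[n_validation:], items[:n_validation]

train_set, validation_set = train_validation_split(range(10))
print(len(train_set), len(validation_set))
```

The key property is that no example appears in both sets, so the validation score reflects how the model handles data it has not seen.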

6. Deployment

- Once you’re satisfied with the performance, you make the model available to end-users, often through APIs or user interfaces.

- Real-world feedback helps you refine and improve the model over time.

7. Continuous Improvement

- AI is never “done.” Data shifts, needs evolve, and new techniques emerge. You’ll find yourself iterating on the model to keep it current and effective.

This lifecycle repeats, and as more data becomes available, the model can keep improving. Throughout, it’s essential to note that Generative AI doesn’t merely repeat the exact content it was trained on. Instead, it synthesizes new content by identifying and combining learned patterns in novel ways.

Introduction to Retrieval Augmented Generation (RAG)

Generative AI alone is powerful. However, imagine you have a Generative AI model trained on data up to 2022, and you ask it about an event that happened in 2023. Since the model’s knowledge stops at 2022, it won’t be aware of what occurred in 2023. That’s a problem if your application demands up-to-date information.

This is where Retrieval Augmented Generation (RAG) comes in. RAG takes Generative AI to the next level by giving it the capability to retrieve real-time information from external sources. Think of it like having a brilliant writer who can also hop onto the internet to pull the latest facts before crafting an answer.

3.1 Why Do We Need RAG?

1. Up-to-Date Responses: Static models become stale if they don’t continuously retrain on new data. RAG solves this by allowing the model to search for current info whenever needed.

2. Accuracy and Relevance: By retrieving verifiable data, AI-generated answers become more factually correct, especially for time-sensitive questions.

3. Reduced Memory Burden: You don’t need to load the entire internet into your AI model. Instead, the model can fetch details from a dedicated database on demand.

3.2 Core Components of a RAG System

1. Text Processing: Clean up text inputs by removing unnecessary characters and splitting them into paragraphs or sentences. The goal is to make the data more manageable.

2. Chunking: Large documents can overwhelm the AI. Chunking divides text into digestible pieces, each dealing with a single main point.

3. Embeddings: These are numerical representations of text meaning. AI models convert words or sentences into vector forms so they can compare their meanings efficiently.

4. Attention Mechanisms: Let the AI focus on the most relevant chunks of text (imagine a teacher reminding you to focus on important parts of a chapter).

5. Vector Database: Stores the numerical embeddings and supports fast similarity search over them, so relevant text can be found quickly.

6. Retrieval Models: Specialized algorithms (like TF-IDF, BM25, or Dense Retrieval models) that find the text chunks most likely to answer your question.

7. Generative Model Integration: After retrieving the right information, the AI uses that data to form a coherent answer.

Delving Deeper: Retrieval Models vs. Generative Models

4.1 Retrieval Models in Detail

– TF-IDF (Term Frequency–Inverse Document Frequency)

- One of the oldest and most basic retrieval techniques.

- Assigns importance to words based on how frequently they appear in a document compared to the overall document set.

- Benefit: It’s straightforward and quick.

- Drawback: It struggles when synonyms or related terms are used instead of the exact keywords.
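The TF-IDF idea fits in a few lines of Python. This sketch uses one common variant (raw term frequency times a plain logarithmic inverse document frequency); libraries often apply extra smoothing, so treat the exact formula here as an assumption. The sample documents are made up.

```python
import math
from collections import Counter

def tokenize(text):
    """Very simple tokenizer: lowercase and split on whitespace."""
    return text.lower().split()

def tf_idf(term, doc_tokens, corpus_tokens):
    """Score one term for one document, relative to the whole corpus."""
    if not doc_tokens:
        return 0.0
    # Term frequency: share of the document's tokens that are this term.
    tf = Counter(doc_tokens)[term] / len(doc_tokens)
    # Document frequency: number of documents containing the term.
    df = sum(1 for tokens in corpus_tokens if term in tokens)
    idf = math.log(len(corpus_tokens) / df) if df else 0.0
    return tf * idf

corpus = [tokenize(d) for d in ["the cat sat", "the dog ran", "the cat ran fast"]]
for term in ["the", "cat", "sat", "feline"]:
    print(term, round(tf_idf(term, corpus[0], corpus), 3))
```

A word that appears in every document ("the") scores zero, a rarer word ("sat") scores highest, and a synonym that never appears literally ("feline") also scores zero. That last case is the drawback described above.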

– BM25

- An evolution of TF-IDF that takes into account term frequency saturation and document length.

- Benefit: More accurate than basic TF-IDF for text search.

- Drawback: Still heavily reliant on exact keyword matching.
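Here is a small sketch of BM25 scoring that shows both ideas: saturation (extra repetitions of a word help less and less) and document-length normalization. The parameter defaults `k1=1.5` and `b=0.75` are commonly used values, and the IDF formula is one popular smoothed variant; both are assumptions rather than the only correct choice.

```python
import math
from collections import Counter

def bm25_score(query_tokens, doc_tokens, corpus_tokens, k1=1.5, b=0.75):
    """Score one document against a query with BM25 (assumes a non-empty corpus)."""
    n_docs = len(corpus_tokens)
    avg_len = sum(len(tokens) for tokens in corpus_tokens) / n_docs
    counts = Counter(doc_tokens)
    score = 0.0
    for term in query_tokens:
        df = sum(1 for tokens in corpus_tokens if term in tokens)
        # Smoothed inverse document frequency; stays non-negative.
        idf = math.log((n_docs - df + 0.5) / (df + 0.5) + 1)
        freq = counts[term]
        # k1 controls saturation; b controls how much document length matters.
        denominator = freq + k1 * (1 - b + b * len(doc_tokens) / avg_len)
        score += idf * freq * (k1 + 1) / denominator
    return score

corpus = [["cat", "sat"], ["cat", "cat", "cat", "sat"], ["dog", "ran"]]
for doc in corpus:
    print(doc, round(bm25_score(["cat"], doc, corpus), 3))
```

The document that mentions "cat" three times scores higher than the one that mentions it once, but nowhere near three times higher. The document without the word scores zero, which is the exact-keyword limitation again.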

– Dense Retrieval Models (e.g., DPR)

- Use neural networks to convert text into embeddings, capturing semantic meaning.

- Benefit: Far better at picking up context. If your user asks about “heart attacks,” the model knows that “cardiac arrests” are relevant.

- Drawback: More computationally expensive and complex to set up.
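Dense retrieval compares embeddings, usually with cosine similarity. In the sketch below the three-dimensional vectors are invented by hand purely for illustration; in a real system a neural encoder produces vectors with hundreds of dimensions.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Made-up "embeddings": NOT the output of any real model.
toy_embeddings = {
    "heart attack": [0.9, 0.1, 0.0],
    "cardiac arrest": [0.8, 0.2, 0.1],
    "stock market": [0.0, 0.1, 0.9],
}

query = toy_embeddings["heart attack"]
for phrase, vector in toy_embeddings.items():
    print(phrase, round(cosine_similarity(query, vector), 3))
```

Even though "heart attack" and "cardiac arrest" share no words, their vectors point in nearly the same direction, so they score as similar. A keyword method like TF-IDF or BM25 would see no match at all.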

4.2 Generative Models in Detail

1. Transformer Architecture

- The state-of-the-art approach for language tasks. Transformers use “self-attention” to handle words in a sequence, enabling them to capture long-range dependencies and context with relative ease.

2. Attention Mechanisms

- Picture reading a long document; your attention naturally drifts to parts you find most relevant. AI does the same, focusing computational resources on key segments of text.
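The "focus" described here is computed with scaled dot-product attention. The following is a minimal pure-Python sketch for a single query; the tiny vectors are made up, and real models run this over large matrices with learned weights.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtracting the max avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    # How well does the query match each key?
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    # The output is a weighted average of the value vectors.
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

weights, output = attention(
    query=[5.0, 0.0],
    keys=[[1.0, 0.0], [0.0, 1.0]],
    values=[[10.0, 0.0], [0.0, 10.0]],
)
print([round(w, 3) for w in weights], [round(x, 3) for x in output])
```

The query lines up with the first key, so almost all of the weight goes to the first value. That is the sense in which the model "pays attention" to the most relevant part of its input.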

3. Word Prediction and Decoding

- Generative models create text one token at a time (a token is a word or a piece of a word), predicting the next token based on what came before. They keep doing this until they form a complete, coherent output.
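The predict-one-piece-then-repeat loop can be sketched with a toy lookup table. The probabilities below are invented, and this toy only looks at the previous token; a real language model computes the probabilities with a neural network and conditions on everything generated so far.

```python
# Hypothetical next-token probabilities, written by hand for illustration.
NEXT_TOKEN_PROBS = {
    "<start>": {"RAG": 0.6, "AI": 0.4},
    "RAG": {"retrieves": 0.7, "writes": 0.3},
    "retrieves": {"facts": 0.8, "<end>": 0.2},
    "facts": {"<end>": 1.0},
}

def greedy_decode(probs, max_tokens=10):
    """Repeatedly pick the most likely next token until <end> or the length limit."""
    tokens = []
    current = "<start>"
    for _ in range(max_tokens):
        candidates = probs.get(current)
        if not candidates:
            break  # no known continuation for this token
        current = max(candidates, key=candidates.get)
        if current == "<end>":
            break
        tokens.append(current)
    return tokens

print(" ".join(greedy_decode(NEXT_TOKEN_PROBS)))
```

Always taking the single most likely token is called greedy decoding. Real systems often sample from the probabilities instead, which is one reason the same prompt can produce different answers.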

By mixing these retrieval and generative elements, RAG systems ensure that the AI not only knows how to write but also has quick access to specific, real-world data.

Building a Simple RAG System: Step-by-Step Guide

You might be excited to try RAG for yourself. Here’s a beginner-friendly outline of how you could build a simple RAG pipeline:

- Gather and Prepare Documents

- Collect documents, articles, or any text files relevant to your domain. For instance, if you’re building a healthcare assistant, you’d gather medical guidelines, clinical studies, etc.

- Chunking

- Split large documents into smaller sections (say, 200–500 words each). This helps the AI process and retrieve the data more effectively.
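A simple word-based chunker might look like the sketch below. The overlap between neighboring chunks is an addition of this example, not something the step above requires; it is a common trick so that a sentence cut at a boundary still appears whole in one of the chunks. The function name and defaults are illustrative.

```python
def chunk_words(text, chunk_size=300, overlap=50):
    """Split text into chunks of up to chunk_size words, sharing overlap words between neighbors."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # this chunk already reached the end of the text
    return chunks

sample = " ".join(f"w{i}" for i in range(10))
for chunk in chunk_words(sample, chunk_size=4, overlap=1):
    print(chunk)
```

Splitting on word counts is the bluntest option. Splitting on paragraphs or headings usually keeps each chunk closer to a single main point, at the cost of uneven chunk sizes.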

- Create Embeddings

- Use a library like sentence-transformers (in Python) to convert text chunks into vector embeddings. Each chunk gets transformed into a vector that represents its meaning.

- Vector Database Setup

- Store the embeddings in a vector database such as pgvector (a PostgreSQL extension), or use a standalone similarity-search library like FAISS. Both are specialized for storing and searching through vector embeddings.

- Retrieval Module

- When a user asks a question, convert that query into an embedding. Compare it to the stored embeddings in the vector database to find the most similar (and thus most relevant) text chunks.
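The retrieval step can be sketched end to end with a tiny in-memory store. Two loud assumptions: `toy_embed` is a stand-in that just counts words (a real pipeline would call a neural embedding model here), and `InMemoryVectorStore` is a hypothetical class for illustration, not the API of any real vector database.

```python
import math
import re
from collections import Counter

def toy_embed(text):
    """Stand-in embedding: word counts. NOT a real semantic embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class InMemoryVectorStore:
    """Hypothetical minimal store: keeps (chunk, embedding) pairs in a list."""

    def __init__(self, embed):
        self.embed = embed
        self.items = []

    def add(self, chunk):
        self.items.append((chunk, self.embed(chunk)))

    def search(self, query, top_k=3):
        """Embed the query and return the top_k most similar chunks."""
        query_vec = self.embed(query)
        scored = [(cosine(query_vec, vec), chunk) for chunk, vec in self.items]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [chunk for _, chunk in scored[:top_k]]

store = InMemoryVectorStore(toy_embed)
store.add("Aspirin dosage for adults is described in section two.")
store.add("Blood pressure should be measured twice.")
store.add("Hand washing reduces infection risk.")
print(store.search("What is the aspirin dosage?", top_k=1))
```

Because the embedding function is passed in, you could swap `toy_embed` for a real model later without changing the store. A real vector database does the same comparison, but with indexes that avoid scanning every stored vector.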

- Generative Model Prompting

- Pass the retrieved text chunks (usually 2–5 chunks) into a Generative AI model—like GPT or a similar large language model.

- Give the model a “system prompt” (i.e., an instruction) that explains how it should use the provided chunks. For example: “Use the following text to provide the most accurate answer. Do not include irrelevant information.”
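Putting the retrieved chunks and the instruction together might look like this. The list-of-dictionaries format with `role` and `content` keys mirrors what many chat-style model APIs accept, but that is an assumption; check the documentation of the model you use. The function name `build_messages` is made up.

```python
SYSTEM_PROMPT = (
    "Use the following text to provide the most accurate answer. "
    "Do not include irrelevant information."
)

def build_messages(question, chunks):
    """Combine the instruction, the retrieved chunks, and the user's question."""
    # Number each chunk so the model (and you) can tell the sources apart.
    context = "\n\n".join(f"[{i}] {chunk}" for i, chunk in enumerate(chunks, start=1))
    user_content = f"Context:\n{context}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        {"role": "user", "content": user_content},
    ]

messages = build_messages(
    "What is the adult aspirin dosage?",
    ["Aspirin dosage for adults is described in section two."],
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the instruction separate from the retrieved text makes it easy to experiment with either one on its own.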

- Response Generation

- The AI will compose a response by integrating its innate language generation capabilities with the retrieved information, leading to a more accurate and up-to-date output.

- Iterate and Improve

- Gather user feedback and keep refining your data, retrieval, and generation processes. This might include adding new sources, adjusting chunk sizes, or fine-tuning the model to better handle domain-specific language.

Choosing Platforms and Tools

6.1 Cloud-Based Solutions

– OpenAI’s ChatGPT or GPT-4

- Straightforward to set up. You can call the API with your prompts and get quick results.

- Perfect for rapid prototyping or smaller projects.

– Microsoft Azure AI services (formerly Azure Cognitive Services)

- Offers various AI services, including text analytics and custom model deployment.

– Google Cloud AI

- Features tools like AutoML for training custom models with less coding.

6.2 Self-Hosted or On-Premise Solutions

– Hugging Face Transformers

- An open-source platform with a wide selection of pre-trained models and user-friendly APIs.

- You can download entire models locally, ensuring better control over data privacy.

– LangChain

- A framework for “chaining” multiple components of language-based applications, making it simpler to build RAG pipelines.

– pgvector

- A PostgreSQL extension for storing embeddings directly in your database.

- Ideal for smaller to medium projects where integration with relational data is key.

– pgvectorscale

- A companion PostgreSQL extension that builds on pgvector and adds a disk-based index designed for large-scale storage and search of vector embeddings.

- Great for enterprise applications or very large datasets.

Making the Most of RAG: Tips for Beginners

- Prompt Engineering

- How you phrase your question or request can drastically affect the AI’s response. Experiment with different prompts, and consider using templates like:

“Summarize the following text in a simple manner…”

“Answer the user query using only the information provided. The user query is: …”

- Clearly specify the AI’s “role” and constraints, ensuring it remains on topic.

- Leverage AI as a “Collaborator”

- Don’t treat AI as just a machine that spits out answers. Treat it like a brainstorming partner—pose multiple questions, ask it to refine or reformat answers, and guide it toward better outputs.

- Maintain Context with Chat History

- In chat interfaces, keep track of the conversation’s context so your AI won’t “forget” previous queries and answers. This makes interactions more natural and efficient.
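One simple way to keep context without letting the conversation grow forever is to keep the instructions plus only the most recent messages. This is a sketch under the same assumed `role`/`content` message format used by many chat APIs; the name `trim_history` and the limit of six messages are illustrative.

```python
def trim_history(messages, max_messages=6):
    """Keep all system messages plus the most recent max_messages other messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    recent = rest[-max_messages:] if max_messages > 0 else []
    return system + recent

history = [{"role": "system", "content": "Answer using only the provided context."}]
for turn in range(1, 6):
    history.append({"role": "user", "content": f"question {turn}"})
    history.append({"role": "assistant", "content": f"answer {turn}"})

trimmed = trim_history(history, max_messages=4)
for message in trimmed:
    print(message["role"], "->", message["content"])
```

Dropping old turns is the crudest strategy. Another option is to ask the model to summarize older turns and keep the summary instead.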

- Iterate Often

- AI models, especially Generative AI, thrive on iteration. Ask questions, gauge the quality of answers, and fine-tune until you reach the desired level of performance.

- Stay Informed

- The AI field evolves quickly. New research, new models, and new techniques appear regularly. Keep an eye on AI communities, blogs, and open-source contributions to stay at the cutting edge.

Real-World Applications: Where RAG Shines

1. Customer Support

- Large companies often have extensive knowledge bases. RAG can help chatbots find the right answer to a specific query, rather than the chatbot relying solely on outdated training data.

2. Content Creation and Research

- Writers and researchers can tap into up-to-date references. For example, a journalist working on a breaking news story could quickly pull accurate stats or quotes.

3. Healthcare

- Doctors and nurses can retrieve the latest guidelines and research findings when making patient care decisions. Instead of combing through journals manually, they can query a system that knows exactly where to look.

4. Legal Document Analysis

- Lawyers dealing with massive case files can use RAG to zero in on the relevant statutes and past cases, then produce summaries for easy review.

5. Academic Research

- Students and professors can navigate thousands of papers to find relevant articles. The system can then synthesize a succinct overview of those articles.

Addressing Ethical and Responsible AI Use

With great power comes great responsibility. Generative AI and RAG are shaping how we interact with information, but they also raise important ethical questions:

1. Data Bias

- If your training data is skewed—lacking representation across different regions, languages, or demographics—your AI’s outputs may exhibit bias. Always review and diversify data sources.

2. Misuse of Fake Media

- The same tech that creates helpful summaries can also generate deepfakes or false information. Verify sources and consider watermarking AI-generated content where appropriate.

3. Privacy Concerns

- RAG often involves real-time data retrieval. Ensure you’re following data protection laws, especially if sensitive user data might appear in search results.

4. Hallucinations

- Generative models sometimes produce information that sounds plausible but is factually incorrect. By integrating retrieval steps, RAG mitigates this risk but doesn’t eliminate it entirely. Always cross-verify critical information.

5. Transparency and Accountability

- Users have a right to know when they’re interacting with an AI and how their data is used. Maintain transparent policies and allow for user feedback.

Looking Ahead: The Future of Generative AI and RAG

Generative AI and RAG are evolving at lightning speed. Research labs and tech companies keep introducing breakthroughs in model efficiency, context handling, and creative capabilities. Here’s what to expect in the near future:

1. More Domain-Specific Models

- Instead of large, general-purpose models, you’ll see smaller, highly specialized ones fine-tuned for legal, medical, or engineering tasks. This specialization leads to better accuracy in niche areas.

2. Larger Context Windows

- Future models will handle longer input contexts, meaning you can feed entire books or lengthy documents to the AI, and it will still keep track of the details.

3. Real-Time Collaboration

- Imagine multiple AI systems working together: one focusing on retrieval, one on generation, and another on fact-checking. This synergy could dramatically boost accuracy and speed.

4. Regulatory Frameworks

- Governments and international bodies are increasingly scrutinizing AI. Expect more guidelines and regulations concerning data handling, ethical use, and transparency.

5. New Forms of Interaction

- Voice and augmented reality interfaces may become standard for RAG systems, letting users interact with AI via wearable devices or smart glasses in a seamless, hands-free way.

Conclusion: Embrace the Power of Generative AI and RAG

We’ve journeyed from understanding how Generative AI works—with its emphasis on pattern recognition and creativity—to seeing how Retrieval Augmented Generation (RAG) extends that capability by fetching the latest, most relevant information. By combining these elements, you get AI systems that are both imaginative and accurate, making them well-suited for a host of practical applications—customer service bots, research assistants, content generators, and so much more.

For beginners, the main takeaway is that you don’t need to be an AI scientist to use these tools. Many platforms and open-source projects have lowered the barriers to entry. With a clear goal, a decent dataset, and a willingness to experiment, you can leverage Generative AI and RAG to tackle problems once thought impossible for machines to handle.

For seasoned AI enthusiasts, digging into advanced retrieval techniques and fine-tuning large models can open new frontiers in performance and scale.

We’re witnessing the dawn of a new era—one where humans and AI collaborate seamlessly, each complementing the other’s strengths. Whether you’re brainstorming novel business ideas, summarizing complex research, or simply exploring cutting-edge technology, there’s no better time than now to dive into the world of Generative AI and RAG.

If you have questions or insights on how Generative AI works—or want to share success stories using RAG—feel free to leave a comment below. Happy experimenting, and here’s to a future powered by ever-smarter, ever-more-helpful AI!